Why Should I Care About Queuing?

Queuing in the application server is usually an indicator of a performance problem, rather than a problem in its own right. Requests will back up on the inbound queue because the application server cannot process them as fast as they arrive. This is usually seen on the APPQ which is serviced by the PSAPPSRV process, but applies to other server processes too. Common causes include (but are not limited to):- Poor performance of either SQL on the database or PeopleCode executed within the application server is extending service duration

- The application server domain is undersized for the load. Increasing the number of application server domains or application server process may be appropriate. However, before increasing the number of server process it is necessary to ensure that the physical server has sufficient memory and CPU to support the domain (if the application server CPU is overloaded then requests move from the Tuxedo queues to the operating system run queue).

- The application server has too many server processes per queue causing contention in the systems calls that enqueue and dequeue requests to and from IPC queue structure. A queue with more than 8-10 application server processes can exhibit this contention. There will be a queue of inbound requests, but not all the server processes will be non-idle.

What you do about queuing depends on the circumstances, but it is something that you do want to know about.

3 Ways to Measure Application Server Queuing

There are a number of ways to detect queuing in Tuxedo- Direct measurement of the Tuxedo domain using the tmadmin command-line interface. A long time ago I wrote a shell script tuxmon.sh. It periodically runs the printqueue and printserver commands on an application server and extracts comma separated data to a flat that can then be loaded into a database. It would have to be configured for each domain in a system.

- Direct Measurement with PeopleSoft Performance Monitor (PPM). Events 301 and 302 simulate the printqueue and printserver commands. However, event 301 only works from PT8.54 (and at the time of writing I am working on a PT8.53 system). Even then, the measurements would only be taken once per event cycle, which defaults to every 5 minutes. I wouldn't recommend increasing the sample frequency, so this will only ever be quite a coarse measurement.

- Indirect Measurement from sampled PPM transactions. Although includes time spent on the return queue and to unpack the Tuxedo message. This technique is what the rest of this article is about.

Indirectly Measuring Application Server Queuing from Transactional Data

Every PIA and Portal request includes a Jolt call made by the PeopleSoft servlet to the domain. The Jolt call is instrumented in PPM as transaction 115. Various layers in the application server are instrumented in PPM, and the highest point is transaction 400 which where the service enters the PeopleSoft application server code. Transaction 400 is always the immediate child of transaction 115. The difference in the duration of these transactions is the duration of the following operations:- Transmit the message across the network from the web server to the JSH. There is a persistent TCP socket connection.

- To enqueue the message on the APPQ queue (including writing the message to disk if it cannot fit on the queue).

- Time spent in the queue

- To dequeue the message from the queue (including reading the message back from disk it was written there).

- To unpack the Tuxedo message and pass the information to the service function

- And then repeat the process for the return message back to the web server via the JSH queue (which is not shown in tmadmin)

Some simple arithmetic can convert this duration into an average queue length. A queue length of n means that n requests are waiting in the queue. Each second there are n seconds of queue time. So the number of seconds per second of queue time is the same as the queue length.

I can take all the sampled transactions in a given time period and aggregate the time spent between transactions 115 and 400. I must multiply it by the sampling ratio, and then divide it by the duration of the time period for which I am aggregating it. That gives me the average queue length for that period.

This query aggregates queue time across all application server domains in each system. It would be easy to examine a specific application server, web server or time period.

WITH c AS (

SELECT B.DBNAME, b.pm_sampling_rate

, TRUNC(c115.pm_agent_Strt_dttm,'mi') pm_agent_dttm

, A115.PM_DOMAIN_NAME web_domain_name

, SUBSTR(A400.PM_HOST_PORT,1,INSTR(A400.PM_HOST_PORT,':')-1) PM_tux_HOST

, SUBSTR(A400.PM_HOST_PORT,INSTR(A400.PM_HOST_PORT,':')+1) PM_tux_PORT

, A400.PM_DOMAIN_NAME tux_domain_name

, (C115.pm_trans_duration-C400.pm_trans_duration)/1000 qtime

FROM PSPMAGENT A115 /*Web server details*/

, PSPMAGENT A400 /*Application server details*/

, PSPMSYSDEFN B

, PSPMTRANSHIST C115 /*Jolt transaction*/

, PSPMTRANSHIST C400 /*Tuxedo transaction*/

WHERE A115.PM_SYSTEMID = B.PM_SYSTEMID

AND A115.PM_AGENT_INACTIVE = 'N'

AND C115.PM_AGENTID = A115.PM_AGENTID

AND C115.PM_TRANS_DEFN_SET=1

AND C115.PM_TRANS_DEFN_ID=115

AND C115.pm_trans_status = '1' /*valid transaction only*/

--

AND A400.PM_SYSTEMID = B.PM_SYSTEMID

AND A400.PM_AGENT_INACTIVE = 'N'

AND C400.PM_AGENTID = A400.PM_AGENTID

AND C400.PM_TRANS_DEFN_SET=1

AND C400.PM_TRANS_DEFN_ID=400

AND C400.pm_trans_status = '1' /*valid transaction only*/

--

AND C115.PM_INSTANCE_ID = C400.PM_PARENT_INST_ID /*parent-child relationship*/

AND C115.pm_trans_duration >= C400.pm_trans_duration

), x as (

SELECT dbname, pm_agent_dttm

, AVG(qtime) avg_qtime

, MAX(qtime) max_qtime

, c.pm_sampling_rate*sum(qtime)/60 avg_qlen

, c.pm_sampling_rate*count(*) num_services

FROM c

GROUP BY dbname, pm_agent_dttm, pm_sampling_rate

)

SELECT * FROM x

ORDER BY dbname, pm_agent_dttm

- Transactions are aggregated per minute, so the queue time is divided by 60 at the end of the calculation because we are measuring time in seconds.

Is this calculation and assumption reasonable?

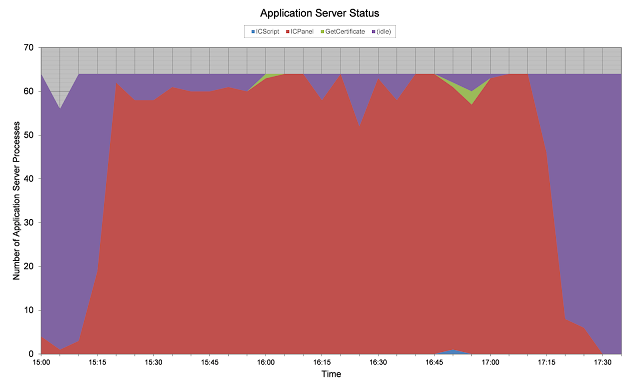

The best way to validate this approach would be to measure queuing directly using tmadmin. I could also try this on a PT8.54 system where event 301 will report the queuing. This will have to wait for a future opportunity.However, I can compare queuing with the number of busy application servers at reported by PPM event 302 for the CRM database. Around 16:28 queuing all but disappears. We can see that there were a few idle application servers which is consistent with the queue being cleared. Later the queuing comes back, and most of the application servers are busy again. So it looks reasonable.

2 comments :

You have to set PeopleSoft Performance Monitor up so that it is collecting transaction data at standard filter level. There is a whole PeopleBook dedicated to PPM. The data will go to the monitoring system, not the monitored system.

There are also a number of presentations on my website 'www.go-faster.co.uk', and some other postings on this blog that deal with optimising PPM.

Nice Blog! Thanks for sharing such post.

Post a Comment